Introduction

In today’s rapidly evolving technological landscape, artificial intelligence has emerged as a transformative force across industries. At the forefront of this revolution is OpenAI, whose powerful API has democratized access to cutting-edge AI capabilities for developers worldwide. OpenAI’s API serves as a bridge between sophisticated AI models and practical applications, enabling developers to integrate advanced natural language processing, image generation, and other AI functionalities into their projects with unprecedented ease.

This comprehensive guide aims to walk you through the fundamentals of OpenAI’s API, from understanding its core capabilities to implementing your first API calls and beyond. Whether you’re a seasoned developer looking to expand your toolkit or a newcomer eager to explore the possibilities of AI integration, this guide will provide you with the knowledge and practical skills needed to harness the full potential of OpenAI’s technology.

The journey into AI development represents more than just learning a new skill—it’s about unlocking new possibilities for innovation and problem-solving. As we like to say at Ai Mind Center, it’s time to “Unlock Your Potential With AI.” By mastering OpenAI’s API, you’ll gain access to tools that can elevate your projects, streamline complex processes, and open doors to creative solutions that were previously unimaginable.

Throughout this guide, we’ll emphasize practical applications and real-world examples, ensuring that you not only understand the theoretical aspects but also gain hands-on experience with implementing OpenAI’s API in your own projects. Let’s embark on this journey together and discover how OpenAI’s API can revolutionize your development workflow.

Understanding OpenAI’s API

Before diving into the implementation details, it’s essential to understand what an API is and specifically what makes OpenAI’s API unique. API stands for Application Programming Interface, which acts as an intermediary allowing different software applications to communicate with each other. In simpler terms, an API is a set of rules and protocols that define how software components should interact, enabling developers to access specific features or data from another application or service without needing to understand its internal workings.

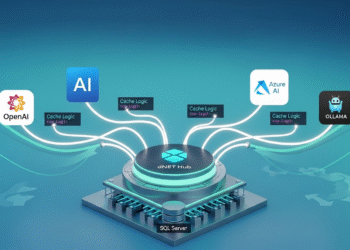

OpenAI’s API provides programmatic access to OpenAI’s suite of powerful AI models, including the GPT (Generative Pre-trained Transformer) family, DALL-E for image generation, and other specialized models. This interface allows developers to send requests to these models and receive AI-generated outputs that can be integrated into various applications, websites, or services.

Key Features and Capabilities

OpenAI’s API offers several groundbreaking capabilities that have revolutionized AI accessibility:

1. Natural Language Processing The API excels at understanding and generating human-like text, making it versatile for applications ranging from content creation and summarization to translation and conversation. The models can comprehend context, maintain coherence across long passages, and generate responses that are contextually relevant and nuanced.

2. Customization Options Developers can adjust the behavior of the AI models through request parameters such as temperature (controlling randomness), max tokens (limiting response length), and presence penalties (discouraging tokens that have already appeared). These options let you tailor responses to your application’s needs without retraining a model.

3. Multimodal Capabilities Beyond text processing, OpenAI’s newer models can work with multiple types of data simultaneously. For example, GPT-4o and GPT-4.1 can analyze images and respond to questions about them, while DALL-E can generate original images from text descriptions.

4. Versioned Models The API provides access to different versions of AI models, each with its own strengths and performance characteristics. This allows developers to choose the model that best fits their specific requirements, balancing factors like accuracy, speed, and cost.

5. Robust Documentation and Support OpenAI provides extensive documentation, tutorials, and community forums to help developers implement and troubleshoot their API integrations effectively.

6. Safety Features Built-in content filtering and moderation tools help ensure that the AI-generated outputs adhere to ethical guidelines and appropriate use policies.

Understanding these capabilities is crucial for developers looking to leverage OpenAI’s API effectively. The API’s versatility makes it applicable across diverse domains, from customer service chatbots and content generation tools to educational applications and creative writing assistants. As we progress through this guide, you’ll gain deeper insights into how these features can be applied to solve real-world problems and enhance your applications.

Setting Up Your Environment

Before you can start making API calls to OpenAI, you’ll need to set up your development environment properly. This section provides a step-by-step guide to help you get started quickly and efficiently.

Creating an OpenAI Account

- Sign up for OpenAI:

  - Navigate to OpenAI’s website.

  - Click on the “Sign Up” button in the top-right corner.

  - Follow the registration process by providing your email address and creating a password.

  - Verify your email address through the confirmation link sent to your inbox.

- Access the API Dashboard:

  - Once logged in, navigate to the API section or dashboard.

  - This will be your central hub for managing API keys, monitoring usage, and accessing documentation.

Obtaining Your API Key

Your API key is a crucial credential that authenticates your requests to OpenAI’s services:

- Generate an API Key:

  - In your OpenAI dashboard, look for the “API Keys” section.

  - Click on “Create new API key” or a similar option.

  - Give your API key a descriptive name (e.g., “Development Key” or “Project X”).

  - Copy the generated key and store it securely. Remember, this key should be treated like a password!

- Security Best Practices:

  - Never hardcode your API key directly into your application code.

  - Use environment variables or secure secret management systems to store your key.

  - Consider setting up usage limits in the OpenAI dashboard to prevent unexpected charges.
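As a minimal sketch of the environment-variable practice above, the helper below reads the key at startup and fails fast if it is missing. The function names `get_api_key` and `mask_key` are hypothetical, and the variable name `OPENAI_API_KEY` is assumed to match the one used later in this guide.

```python
import os

def get_api_key(var_name="OPENAI_API_KEY"):
    """Read the API key from the environment and fail fast if it is missing."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set. Export it in your shell or add it to a .env file."
        )
    return key

def mask_key(key, visible=4):
    """Return a log-safe version of the key that shows only the last few characters."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]
```

Masking the key before it reaches logs or error messages is one way to keep a credential from leaking through debugging output.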

Installing Required Dependencies

Most developers interact with OpenAI’s API using their preferred programming language. Here, we’ll cover the setup process for Python, which has excellent library support:

- Set Up Python Environment (if not already installed):

  - Download and install Python from python.org (Python 3.8 or newer recommended).

  - Consider using a virtual environment to manage dependencies:

        python -m venv openai-env
        source openai-env/bin/activate  # On Windows: openai-env\Scripts\activate

- Install the OpenAI Python Library:

      pip install openai

- Additional Useful Libraries:

      pip install python-dotenv  # For environment variable management
      pip install requests       # For HTTP requests (already included in many setups)

Configure Your Environment

Create a simple structure for your project:

- Create a project directory:

      mkdir openai-project
      cd openai-project

- Set up environment variables: Create a .env file in your project directory:

      OPENAI_API_KEY=your_api_key_here

- Create a basic script: Create a file named setup_test.py:

      import os

      from dotenv import load_dotenv
      from openai import OpenAI

      # Load environment variables
      load_dotenv()

      # Initialize the OpenAI client
      client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))

      def test_connection():
          try:
              # A simple API call to test connectivity
              response = client.chat.completions.create(
                  model="gpt-4o",
                  messages=[
                      {"role": "user", "content": "Hello, OpenAI!"}
                  ],
                  max_tokens=5
              )
              print("Connection successful!")
              print(f"Response: {response.choices[0].message.content.strip()}")
              return True
          except Exception as e:
              print(f"Connection failed with error: {e}")
              return False

      if __name__ == "__main__":
          test_connection()

- Test your setup:

      python setup_test.py

If everything is configured correctly, you should see a successful connection message and a brief response from the API.

Prerequisites for Effective API Usage

Before diving deeper into OpenAI’s API, ensure you have:

- Basic Programming Knowledge:

  - Familiarity with your chosen programming language (Python recommended for beginners).

  - Understanding of HTTP requests and JSON data structures.

- Development Tools:

  - A code editor or IDE (like VSCode, PyCharm, or even a simple text editor).

  - Command-line interface comfort for running scripts and installing packages.

- API Documentation Access:

  - Bookmark OpenAI’s API Reference for quick access.

  - Join the OpenAI community forum for support if needed.

- Billing Setup (for production use):

  - Add a payment method to your OpenAI account.

  - Set up usage alerts to monitor costs.

  - Understand the pricing structure for different models.

With your environment properly configured and prerequisites checked, you’re now ready to make your first API call to OpenAI and begin exploring its capabilities.

Making Your First API Call

Now that your environment is set up, it’s time to make your first API call to OpenAI. This section will walk you through creating a simple application that leverages the power of OpenAI’s language models, with clear explanations of each step and component.

Understanding the Basic Structure of an API Call

An OpenAI API call typically consists of the following components:

- Authentication: Your API key that proves you have permission to access the service.

- Endpoint Selection: The specific API endpoint you’re targeting (e.g., chat completions, responses, images).

- Model Selection: Choosing which AI model to use for your task.

- Parameters: Configuration options that control how the model processes your request.

- Prompt/Input: The content you’re sending to the model for processing.
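To make these five components concrete, here is a rough sketch that assembles the raw HTTP request for the Chat Completions endpoint without sending it. The helper name `build_chat_request` is hypothetical; in practice the official Python library builds this request for you.

```python
import json

API_URL = "https://api.openai.com/v1/chat/completions"  # Endpoint selection

def build_chat_request(api_key, prompt, model="gpt-4o", max_tokens=50, temperature=0.7):
    """Assemble the URL, headers, and JSON body for a chat completion request (nothing is sent)."""
    headers = {
        "Authorization": f"Bearer {api_key}",  # Authentication
        "Content-Type": "application/json",
    }
    body = {
        "model": model,                # Model selection
        "max_tokens": max_tokens,      # Parameters
        "temperature": temperature,
        "messages": [{"role": "user", "content": prompt}],  # Prompt/input
    }
    return API_URL, headers, json.dumps(body)

url, headers, payload = build_chat_request("your_api_key_here", "Hello, OpenAI!")
print(url)
print(json.loads(payload)["messages"][0]["content"])
```

Seeing the request in this form can help when debugging, since every client library ultimately sends a JSON body like this one over HTTPS.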

Creating a Simple Text Completion Example

Let’s create a basic script that generates text completions based on a prompt. OpenAI offers two main interfaces for text generation: the newer Responses API and the traditional Chat Completions API. We’ll demonstrate both:

    import os

    from dotenv import load_dotenv
    from openai import OpenAI

    # Load environment variables
    load_dotenv()

    # Initialize the OpenAI client
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))


    def generate_text_responses_api(prompt, max_tokens=150):
        """
        Generate text based on a prompt using OpenAI's Responses API.

        Args:
            prompt (str): The input text to generate from
            max_tokens (int): Maximum length of generated text

        Returns:
            str: The generated text
        """
        try:
            # Make the API call using the Responses API
            response = client.responses.create(
                model="gpt-4o",  # Using GPT-4o model
                input=prompt,
                max_output_tokens=max_tokens,  # The Responses API calls this limit max_output_tokens
                temperature=0.7  # Controls randomness: lower is more deterministic
            )
            # Extract and return the generated text
            return response.output_text
        except Exception as e:
            return f"Error: {str(e)}"


    def generate_text_chat_api(prompt, max_tokens=150):
        """
        Generate text based on a prompt using OpenAI's Chat Completions API.

        Args:
            prompt (str): The input text to generate from
            max_tokens (int): Maximum length of generated text

        Returns:
            str: The generated text
        """
        try:
            # Make the API call using the Chat Completions API
            response = client.chat.completions.create(
                model="gpt-4o",  # Using GPT-4o model
                messages=[
                    {"role": "system", "content": "You are a helpful assistant."},
                    {"role": "user", "content": prompt}
                ],
                max_tokens=max_tokens,
                temperature=0.7,  # Controls randomness: lower is more deterministic
                top_p=1.0,  # Nucleus sampling threshold (1.0 considers all tokens)
                frequency_penalty=0.0,  # Penalizes tokens by how often they have appeared
                presence_penalty=0.0  # Penalizes tokens that have appeared at all
            )
            # Extract and return the generated text
            return response.choices[0].message.content
        except Exception as e:
            return f"Error: {str(e)}"


    # Example usage
    if __name__ == "__main__":
        user_prompt = input("Enter a prompt for the AI: ")

        # Using the Responses API (newer, recommended for most use cases)
        print("\nGenerated Response (Responses API):")
        responses_api_text = generate_text_responses_api(user_prompt)
        print(responses_api_text)

        # Using the Chat Completions API (traditional approach)
        print("\nGenerated Response (Chat Completions API):")
        chat_api_text = generate_text_chat_api(user_prompt)
        print(chat_api_text)

Understanding the Parameters

Let’s break down the key parameters used in the API calls:

- model: Specifies which model to use. “gpt-4o” is one of OpenAI’s powerful models that offers a good balance of capability and cost. Other options include the GPT-4.1 family of models (specialized for coding tasks) and GPT-3.5-turbo for faster, more economical processing.

- messages (Chat Completions API): For the chat completion endpoint, we provide a list of message objects. Each message has a “role” (system, user, or assistant) and “content” (the actual text).

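- input (Responses API): For the newer responses endpoint, the simplest form is a single input string containing the user’s query. The endpoint also accepts a list of role-tagged messages when you need more structure.

- max_tokens (Chat Completions API) / max_output_tokens (Responses API): Caps the length of the generated text. The two endpoints use different names for this limit. Each token is roughly 4 characters or 3/4 of a word in English.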

- temperature: Affects the randomness of the output. Higher values (closer to 1.0) make the output more random, while lower values make it more focused and deterministic.

- top_p: An alternative to temperature, using nucleus sampling. It selects from the smallest possible set of tokens whose cumulative probability exceeds the probability p.

- frequency_penalty and presence_penalty: These parameters help reduce repetition in the generated text. Frequency penalty lowers a token’s likelihood in proportion to how often it has already appeared, while presence penalty applies a flat penalty to any token that has appeared at all, which nudges the model toward new topics.
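To build intuition for temperature and top_p, the sketch below applies both ideas to a made-up set of token scores. It is a simplified illustration of the concepts, not OpenAI’s actual sampling code, and the function names and scores are invented for the example.

```python
import math

def softmax_with_temperature(logits, temperature=1.0):
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def nucleus(probs, top_p=0.9):
    """Return indices of the smallest set of tokens whose cumulative probability reaches top_p."""
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    return kept

logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
for t in (0.2, 1.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs], nucleus(probs, top_p=0.9))
```

At the lower temperature almost all probability lands on the top-scoring token, so the nucleus shrinks to a single candidate; at temperature 1.0 the distribution is flatter and more candidates stay in play.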

Interpreting the Response

When you make an API call to OpenAI, the response object contains several important pieces of information:

- output_text (Responses API): The actual generated text from the model.

- choices (Chat Completions API): An array containing the generated outputs. For most basic calls, you’ll only have one item in this array (index 0).

- message (Chat Completions API): When using the chat endpoint, each choice contains a message object with the AI’s response.

- content (Chat Completions API): The actual generated text from the model.

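- finish_reason (Chat Completions API): Found on each choice, this indicates why the model stopped generating text (e.g., “stop” means it completed naturally, “length” means it hit the token limit). The Responses API conveys the same information through the response’s status and incomplete_details fields.

- usage: Information about token usage for billing purposes. The Chat Completions API reports the fields below; the Responses API names the first two input_tokens and output_tokens:

  - prompt_tokens: Number of tokens in your input

  - completion_tokens: Number of tokens in the model’s response

  - total_tokens: Sum of prompt and completion tokens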
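Because billing is based on these token counts, a rough cost estimate can be sketched as follows. The helper name `estimate_cost` is hypothetical, and the prices are placeholders, not real OpenAI rates; substitute the current per-million-token prices for your model.

```python
def estimate_cost(prompt_tokens, completion_tokens,
                  input_price_per_million, output_price_per_million):
    """Estimate the cost of one call in dollars from its token counts."""
    input_cost = prompt_tokens / 1_000_000 * input_price_per_million
    output_cost = completion_tokens / 1_000_000 * output_price_per_million
    return input_cost + output_cost

# Placeholder prices, NOT real OpenAI rates: check the pricing page for your model
cost = estimate_cost(prompt_tokens=1200, completion_tokens=300,
                     input_price_per_million=2.0, output_price_per_million=8.0)
print(f"Estimated cost: ${cost:.4f}")
```

Input and output tokens are usually priced differently, which is why the sketch keeps the two rates separate.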

Expanding Our Example: A Simple Conversational Agent

Let’s extend our example to maintain a conversation history using the Chat Completions API:

    import os

    from dotenv import load_dotenv
    from openai import OpenAI

    # Load environment variables
    load_dotenv()

    # Initialize the OpenAI client
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))


    def chat_with_ai():
        """Simple conversation loop with the OpenAI API"""
        print("Welcome to the OpenAI Conversation Bot! (Type 'exit' to quit)")

        # Initialize conversation history
        conversation = [
            {"role": "system", "content": "You are a helpful assistant."}
        ]

        while True:
            # Get user input
            user_input = input("\nYou: ")

            # Check if user wants to exit
            if user_input.lower() in ['exit', 'quit', 'bye']:
                print("Goodbye!")
                break

            # Add user message to conversation
            conversation.append({"role": "user", "content": user_input})

            try:
                # Call the API
                response = client.chat.completions.create(
                    model="gpt-4o",
                    messages=conversation,
                    max_tokens=150
                )

                # Get assistant's reply
                assistant_reply = response.choices[0].message.content

                # Print the reply
                print(f"\nAssistant: {assistant_reply}")

                # Add assistant's reply to conversation history
                conversation.append({"role": "assistant", "content": assistant_reply})
            except Exception as e:
                print(f"Error: {str(e)}")


    if __name__ == "__main__":
        chat_with_ai()

This example demonstrates how to build a simple conversational agent that maintains context across multiple turns of dialogue. By appending each message to the conversation history, the model can reference previous exchanges, resulting in more coherent and contextually appropriate responses.

Using the Newer Responses API for Conversations

For comparison, here’s how you could implement a similar conversational agent using the newer Responses API:

    import os

    from dotenv import load_dotenv
    from openai import OpenAI

    # Load environment variables
    load_dotenv()

    # Initialize the OpenAI client
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))


    def chat_with_ai_responses():
        """Simple conversation loop with the OpenAI Responses API"""
        print("Welcome to the OpenAI Conversation Bot (Responses API)! (Type 'exit' to quit)")

        # Initialize conversation history for tracking
        conversation_history = ""

        while True:
            # Get user input
            user_input = input("\nYou: ")

            # Check if user wants to exit
            if user_input.lower() in ['exit', 'quit', 'bye']:
                print("Goodbye!")
                break

            # Add user input to conversation history
            if conversation_history:
                conversation_history += f"\n\nUser: {user_input}"
            else:
                conversation_history = f"User: {user_input}"

            try:
                # Call the API with conversation context
                response = client.responses.create(
                    model="gpt-4o",
                    input=conversation_history,
                    instructions="You are a helpful assistant. Respond directly to the user's latest message.",
                    max_output_tokens=150  # The Responses API calls this limit max_output_tokens
                )

                # Get assistant's reply
                assistant_reply = response.output_text

                # Print the reply
                print(f"\nAssistant: {assistant_reply}")

                # Add assistant's reply to conversation history
                conversation_history += f"\n\nAssistant: {assistant_reply}"
            except Exception as e:
                print(f"Error: {str(e)}")


    if __name__ == "__main__":
        chat_with_ai_responses()

With these examples and explanations, you should now have a solid understanding of how to make basic API calls to OpenAI and interpret the results. In the next section, we’ll explore best practices for using the API effectively and responsibly.

Best Practices for Using OpenAI’s API

Implementing OpenAI’s API effectively requires more than just technical knowledge. It involves adhering to ethical guidelines, optimizing performance, and managing costs efficiently. This section outlines key best practices to help you maximize the value of your API integration while using it responsibly.

Ethical Considerations and Responsible AI Use

1. Content Moderation

- Implement content filtering on both input and output to prevent misuse of the API.

- Consider using OpenAI’s Moderation API to automatically flag potentially harmful content:

    def check_content_safety(text):
        """Check if content complies with usage policies"""
        try:
            response = client.moderations.create(input=text)
            return not response.results[0].flagged
        except Exception as e:
            print(f"Moderation error: {e}")
            return False  # Fail closed: treat the text as unsafe if the check itself fails

    # Example usage
    user_input = input("Enter text: ")
    if check_content_safety(user_input):
        # Process the input
        response = generate_text_responses_api(user_input)
    else:
        print("Input contains potentially harmful content")

2. Transparency with Users

- Clearly disclose when content is AI-generated.

- Provide mechanisms for users to report problematic outputs.

- Be transparent about the capabilities and limitations of AI-generated content.

3. Bias Mitigation

- Be aware that AI models may reflect biases present in their training data.

- Regularly audit your application’s outputs for potential biases.

- Consider implementing human review processes for sensitive use cases.

4. Privacy Protection

- Avoid sending personally identifiable information (PII) through the API when possible.

- Implement data retention policies that align with privacy regulations.

- Consider the privacy implications of storing conversation histories.
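One way to act on the first point is to redact obvious identifiers before text leaves your system. The sketch below uses two deliberately simple regular expressions; the patterns and the `redact_pii` name are assumptions for illustration, and production-grade PII detection generally needs a dedicated tool.

```python
import re

# Deliberately simple patterns for illustration; real PII detection needs more than regexes
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact_pii(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text

print(redact_pii("Contact jane.doe@example.com or call +1 (555) 123-4567."))
```

Redacting before the API call, rather than after, means the identifiers never appear in request logs or stored conversation histories.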

Optimizing API Calls for Performance and Cost

1. Model Selection

- Choose the appropriate model for your task:

- For coding tasks and accuracy-critical applications, use “gpt-4.1” or “gpt-4o”.

- For lower-cost applications with reasonable performance, use “gpt-4.1-mini” or “gpt-4o-mini”.

- For highest speed and lowest cost, consider “gpt-4.1-nano” or “gpt-3.5-turbo”.

2. Token Optimization

- Remember that you pay for both input and output tokens.

- Keep prompts concise while maintaining necessary context.

- Use system messages efficiently to set context rather than repeating instructions in each user message.

- Consider token usage in your conversation history management:

    def truncate_conversation(conversation, max_tokens=3000):
        """Truncate conversation history to stay within token limits"""
        # Simple implementation: word counts stand in for token counts, which is only a rough
        # approximation. In production, you might want a real tokenizer and smarter methods.
        truncated = [conversation[0]]  # Keep the system message
        token_count = len(conversation[0]["content"].split())

        # Add messages from the end (most recent) until we approach the limit
        for message in reversed(conversation[1:]):
            message_tokens = len(message["content"].split())
            if token_count + message_tokens < max_tokens:
                truncated.insert(1, message)  # Insert after system message, preserving order
                token_count += message_tokens
            else:
                break

        return truncated

3. Intelligent Caching

- Cache common responses to reduce API calls:

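    import hashlib
    import json

    # Simple in-memory cache keyed by a hash of the prompt and parameters
    _completion_cache = {}

    def generate_text_with_cache(prompt, **params):
        # Create a unique key based on the prompt and parameters
        param_string = json.dumps(params, sort_keys=True)
        prompt_hash = hashlib.md5(f"{prompt}{param_string}".encode()).hexdigest()

        # Only call the API on a cache miss
        if prompt_hash not in _completion_cache:
            response = client.chat.completions.create(
                model="gpt-4o",
                messages=[{"role": "user", "content": prompt}],
                **params
            )
            _completion_cache[prompt_hash] = response.choices[0].message.content

        # Cached text is replayed as-is, so this suits prompts where a repeated answer is acceptable
        return _completion_cache[prompt_hash]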

4. Batch Processing

- When possible, batch requests instead of making many individual API calls.

- Consider asynchronous processing for non-interactive applications:

    import asyncio
    import os

    from openai import AsyncOpenAI

    async def process_prompts_batch(prompts):
        client = AsyncOpenAI(api_key=os.getenv("OPENAI_API_KEY"))

        # Build one request coroutine per prompt
        tasks = []
        for prompt in prompts:
            tasks.append(
                client.chat.completions.create(
                    model="gpt-4o",
                    messages=[{"role": "user", "content": prompt}],
                    max_tokens=100
                )
            )

        # Run all requests concurrently and return results in prompt order
        return await asyncio.gather(*tasks)
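Launching every request at once can run into rate limits on large batches. A common refinement, sketched here with a stand-in coroutine so it runs offline, is to cap the number of requests in flight with a semaphore. The names `fake_completion` and `run_limited` are hypothetical; in a real application the `call` argument would wrap the API request.

```python
import asyncio

async def fake_completion(prompt):
    """Stand-in for an API call so the sketch runs offline."""
    await asyncio.sleep(0.01)
    return f"response to: {prompt}"

async def run_limited(prompts, call=fake_completion, max_concurrency=3):
    """Run `call` for every prompt, with at most `max_concurrency` in flight at once."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def guarded(prompt):
        async with semaphore:
            return await call(prompt)

    # return_exceptions=True keeps one failure from discarding the other results
    return await asyncio.gather(*(guarded(p) for p in prompts), return_exceptions=True)

results = asyncio.run(run_limited([f"prompt {i}" for i in range(5)]))
print(results)
```

Results come back in the same order as the prompts, and any failed call appears in the list as an exception object that the caller can inspect.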

5. Implement Retry Logic

- Handle rate limits and transient errors gracefully:

    import backoff  # pip install backoff
    from openai import APIConnectionError, RateLimitError

    @backoff.on_exception(
        backoff.expo,
        (RateLimitError, APIConnectionError),  # Retry only rate limits and transient connection errors
        max_tries=5
    )
    def reliable_completion(prompt):
        try:
            return client.chat.completions.create(
                model="gpt-4o",
                messages=[{"role": "user", "content": prompt}],
                max_tokens=100
            )
        except Exception as e:
            print(f"Error: {e}")
            raise  # Re-raise so the decorator can decide whether to retry
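If you would rather not add a dependency, the same idea can be approximated with the standard library. The sketch below is a hand-rolled exponential backoff with jitter, not the backoff package’s implementation; `retry_with_backoff` and the `flaky` stand-in are hypothetical names, and the demo swaps in a no-op sleep so it finishes instantly.

```python
import random
import time

def retry_with_backoff(func, max_tries=5, base_delay=1.0, max_delay=30.0,
                       retry_on=(Exception,), sleep=time.sleep):
    """Call func(); on a retryable error wait base_delay * 2**attempt (plus jitter) and retry."""
    for attempt in range(max_tries):
        try:
            return func()
        except retry_on:
            if attempt == max_tries - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))  # jitter avoids synchronized retries

attempts = {"count": 0}

def flaky():
    """Stand-in for an API call that fails twice before succeeding."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"

print(retry_with_backoff(flaky, retry_on=(ConnectionError,), sleep=lambda s: None))
```

Doubling the delay after each failure gives a rate-limited service time to recover, while the cap and the attempt limit keep a persistent outage from stalling the caller indefinitely.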

6. Monitor Usage and Set Limits

- Implement usage tracking in your application:

    def track_token_usage(response, user_id=None):
        """Track token usage for monitoring and budgeting"""
        prompt_tokens = response.usage.prompt_tokens
        completion_tokens = response.usage.completion_tokens
        total_tokens = response.usage.total_tokens

        # In a real app, you would store this in a database
        print(f"Usage - Prompt: {prompt_tokens}, Completion: {completion_tokens}, Total: {total_tokens}")

        # You could implement budget limits here
        # if user_has_exceeded_budget(user_id, total_tokens):
        #     send_alert_or_disable_access()
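The budget check hinted at in the comments above could be sketched along these lines. `TokenBudget` is a hypothetical in-memory helper for illustration; a real application would persist the totals in a database and reset them per billing period.

```python
from collections import defaultdict

class TokenBudget:
    """Track per-user token totals in memory and flag users who exceed a limit."""

    def __init__(self, limit_per_user):
        self.limit_per_user = limit_per_user
        self.used = defaultdict(int)

    def record(self, user_id, total_tokens):
        """Add a call's token count to the user's running total."""
        self.used[user_id] += total_tokens
        return self.used[user_id]

    def exceeded(self, user_id):
        """Return True once the user's total is above the limit."""
        return self.used[user_id] > self.limit_per_user

budget = TokenBudget(limit_per_user=1000)
budget.record("user-1", 600)
budget.record("user-1", 500)
print(budget.exceeded("user-1"), budget.exceeded("user-2"))
```

Calling `record` with the `total_tokens` value from each response, then checking `exceeded` before the next request, is enough to enforce a simple per-user cap.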

By implementing these best practices, you’ll not only create more efficient and cost-effective applications but also ensure that your use of AI technology is responsible and aligned with ethical standards. In the next section, we’ll explore common use cases where OpenAI’s API can provide significant value across different industries.

Common Use Cases

OpenAI’s API has become a versatile tool for developers across various industries, powering innovative solutions to complex problems. This section explores practical applications of the API, demonstrating its flexibility and potential through real-world examples and implementation scenarios.

Content Creation and Enhancement

1. Automated Blog Post Generation

Content creators can leverage OpenAI’s API to generate first drafts or outlines of articles, significantly accelerating the writing process:

    def generate_blog_outline(topic, sections=5):
        prompt = f"""
        Create a detailed outline for a blog post about {topic}.
        Include an introduction, {sections} main sections with subsections, and a conclusion.
        For each section, provide 2-3 bullet points of key information to include.
        """

        response = client.responses.create(
            model="gpt-4o",
            input=prompt,
            max_output_tokens=800,
            temperature=0.7
        )

        return response.output_text

2. Content Repurposing

Convert content between different formats to maximize reach and engagement:

    def convert_blog_to_social_posts(blog_content, platform="twitter", count=3):
        prompt = f"""
        Convert the following blog content into {count} engaging {platform} posts.
        Each post should be self-contained and include relevant hashtags.

        Blog content:
        {blog_content}
        """

        response = client.responses.create(
            model="gpt-4o",  # Using GPT-4o for more creative and nuanced social posts
            input=prompt,
            max_output_tokens=500,
            temperature=0.8
        )

        return response.output_text

Customer Support Automation

1. Intelligent Query Routing

Analyze customer inquiries to route them to the appropriate department:

    def categorize_support_ticket(ticket_text):
        prompt = f"""
        Categorize the following customer support ticket into exactly one of these categories:
        - Technical Issue
        - Billing Question
        - Product Information
        - Feature Request
        - Complaint

        Ticket: {ticket_text}

        Category:
        """

        response = client.responses.create(
            model="gpt-4o",
            input=prompt,
            max_output_tokens=20,
            temperature=0.3  # Lower temperature for more consistent categorization
        )

        return response.output_text.strip()
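Model output is free-form text, so it can help to validate the returned label before routing on it. The sketch below maps whatever the model returned onto the category list from the prompt; the `normalize_category` name and the "Uncategorized" fallback are assumptions for illustration.

```python
ALLOWED_CATEGORIES = [
    "Technical Issue",
    "Billing Question",
    "Product Information",
    "Feature Request",
    "Complaint",
]

def normalize_category(model_output, fallback="Uncategorized"):
    """Map free-form model output onto one of the allowed categories."""
    cleaned = model_output.strip().strip(".\"'").lower()
    # Prefer an exact match on the cleaned text
    for category in ALLOWED_CATEGORIES:
        if cleaned == category.lower():
            return category
    # Tolerate extra words, e.g. "Category: Billing Question"
    for category in ALLOWED_CATEGORIES:
        if category.lower() in cleaned:
            return category
    return fallback

print(normalize_category(" billing question.\n"))
print(normalize_category("I think this is a refund"))
```

Tickets that fall through to the fallback can be sent to a human queue rather than being routed on a label the rest of the system does not recognize.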

2. Response Generation for Common Queries

Generate personalized responses to frequently asked questions:

    def generate_support_response(customer_name, query, product_details):
        prompt = f"""
        Generate a helpful, friendly customer support response to the following query about our product.

        Customer Name: {customer_name}
        Product: {product_details}
        Customer Query: {query}

        The response should:
        - Address the customer by name
        - Provide a clear and concise answer
        - Include relevant product information
        - End with an offer for additional help if needed
        """

        response = client.responses.create(
            model="gpt-4o",
            input=prompt,
            max_output_tokens=300,
            temperature=0.7
        )

        return response.output_text

Data Analysis and Summarization

1. Report Summarization

Convert lengthy reports into concise summaries highlighting key information:

    def summarize_financial_report(report_text):
        prompt = f"""
        Summarize the following financial report, highlighting:
        1. Key performance indicators
        2. Revenue changes
        3. Major expenses
        4. Profit margins
        5. Notable trends

        Report:
        {report_text}

        Provide a clear, structured summary that a busy executive could read in 2 minutes.
        """

        response = client.responses.create(
            model="gpt-4.1",  # Using GPT-4.1 for better comprehension of complex financial data
            input=prompt,
            max_output_tokens=500,
            temperature=0.4
        )

        return response.output_text

2. Data Interpretation

Help users understand complex data by providing natural language explanations:

    def explain_data_trends(data_description, target_audience="business"):
        audiences = {
            "business": "a business executive without technical background",
            "technical": "a data scientist or analyst",
            "general": "someone with no specialized knowledge"
        }

        prompt = f"""
        Explain the following data and its trends in simple terms for {audiences.get(target_audience, "someone without specialized knowledge")}:

        {data_description}

        Focus on practical implications and actionable insights.
        """

        response = client.responses.create(
            model="gpt-4o",
            input=prompt,
            max_output_tokens=400,
            temperature=0.6
        )

        return response.output_text

Development and Coding Assistance

1. Code Generation and Debugging

With the powerful coding capabilities of GPT-4.1 models, developers can leverage the API for code generation and debugging:

    def generate_code(task_description, language="python"):
        prompt = f"""
        Write {language} code to accomplish the following task:

        {task_description}

        Provide only the code without explanation.
        """

        response = client.responses.create(
            model="gpt-4.1",  # Using GPT-4.1 for superior coding capabilities
            input=prompt,
            max_output_tokens=800,
            temperature=0.2  # Lower temperature for more deterministic code generation
        )

        return response.output_text


    def debug_code(code, error_message, language="python"):
        prompt = f"""
        Debug the following {language} code that's producing this error:

        ERROR:
        {error_message}

        CODE:
        {code}

        Explain what's wrong and provide the corrected code.
        """

        response = client.responses.create(
            model="gpt-4.1",
            input=prompt,
            max_output_tokens=800,
            temperature=0.3
        )

        return response.output_text

Illustrative Case Study: Educational Platform Enhancement

Let’s examine how a hypothetical online learning platform, “LearnSpace,” integrated OpenAI’s API to enhance student learning experiences:

Challenge: LearnSpace wanted to provide personalized learning assistance to students without hiring a large team of tutors.

Solution: They implemented several AI-powered features using OpenAI’s API:

- Smart Study Guides: Generated customized study materials based on course content and student performance.

- Concept Explanations: Provided alternative explanations when students struggled with course material.

- Practice Question Generation: Created unique practice problems tailored to individual learning needs.

- Essay Feedback: Offered constructive feedback on student writing with specific improvement suggestions.

Implementation Approach:

    def generate_personalized_explanation(concept, student_level, learning_style):
        """Generate personalized explanations based on student needs"""
        prompt = f"""
        Explain the concept of {concept} to a {student_level} level student
        who learns best through {learning_style} examples.

        Use appropriate analogies and examples.
        Include 2-3 practice questions at the end.
        """

        response = client.responses.create(
            model="gpt-4o",
            input=prompt,
            max_output_tokens=800,
            temperature=0.7
        )

        return response.output_text

Results (illustrative figures for this hypothetical scenario, not measured data):

- 85% of students reported better understanding of difficult concepts

- Average course completion rates increased by 23%

- Student satisfaction scores improved by 31%

This case study illustrates how OpenAI’s API could transform educational experiences through personalization at scale, making quality education more accessible and effective.

These examples represent just a fraction of what’s possible with OpenAI’s API. As developers continue to explore its capabilities, we’re likely to see even more innovative applications across industries, from healthcare and finance to entertainment and beyond.

Conclusion

Throughout this guide, we’ve explored the multifaceted capabilities of OpenAI’s API and how it can be harnessed to create intelligent, responsive applications across various domains. From understanding the fundamental concepts and setting up your development environment to implementing practical examples and adhering to best practices, you now have a solid foundation for integrating AI into your projects.

OpenAI’s API represents more than just a technological tool—it’s a gateway to new possibilities in software development and user experience design. By providing developers with accessible means to incorporate advanced AI capabilities into their applications, OpenAI has democratized access to cutting-edge artificial intelligence, allowing innovators of all backgrounds to “Unlock Your Potential With AI.”

As you continue your journey with OpenAI’s API, remember that responsible implementation is as important as technical proficiency. Ethical considerations, performance optimization, and cost management should remain at the forefront of your development process. By balancing these factors, you’ll create applications that not only impress with their capabilities but also build trust with your users.

The examples and implementations we’ve covered are just starting points. We encourage you to experiment, combine different approaches, and push the boundaries of what’s possible with AI integration. Whether you’re building customer support systems, content creation tools, educational platforms, or something entirely new, OpenAI’s API offers the flexibility and power to bring your vision to life.

For more resources, comprehensive documentation, and the latest updates on available models and features, visit the official OpenAI website at https://openai.com. The AI landscape is evolving rapidly, and staying informed about new developments will help you maximize the value of your OpenAI API integration.


This blog post was originally published on Ai Mind Center on May 6, 2025. For more articles on AI development, implementation strategies, and industry insights, browse our blog archive or contact us with your questions.
